The way people search for information is evolving. LLM optimization (or LLMO for short) is transforming content marketing, shifting focus from traditional keyword-centric SEO to strategies that increase visibility within AI-generated answers from platforms like ChatGPT and Bing Chat.

Search behavior is shifting from traditional “search-and-click” to an “answer-first” approach.

This shift requires us to rethink our strategies and tailor our content to rank within AI-driven environments.

How’d you find this article?

Google? LLM?

Thought so.

Funny, isn’t it?

You’re reading an article on “how to rank in LLMs”

…because it ranked in an LLM.

I wrote it using Penfriend.ai.

You can too.

LLM Optimization Guide: How to Optimize Content for AI (2025)

In this extensive guide, I’m going to explain how you can show up in AI answers. You’ll also discover key differences between traditional SEO and LLM-focused optimization, proven on-page tactics (like concise answering, semantic keyword integration, and structured FAQ formatting), essential off-page methods (including strategic PR, Reddit engagement, and Wikipedia presence), and crucial technical optimizations (like effective schema markup).


What Are LLMs, and How Do They Handle Content?

Large Language Models (or LLMs) like ChatGPT and Gemini are AI systems designed to understand and generate human-like text. Unlike traditional search engines that rely on keyword matching and ranking algorithms, LLMs process content through semantic understanding, user intent, and contextual relevance.

Key Features of LLMs:

- Semantic Understanding: LLMs focus on the meaning behind words rather than exact keyword matches.

- Intent Matching: They prioritize answering the user’s query with precise and relevant information.

- Contextual Responses: LLMs can process multi-turn conversations and adjust their answers dynamically.

What is LLM Optimization Then?

LLM optimization, or LLMO, is the practice of tailoring your content and online presence so that AI language models (like ChatGPT, Bard, or Bing Chat) are more likely to feature or cite your content in their responses.

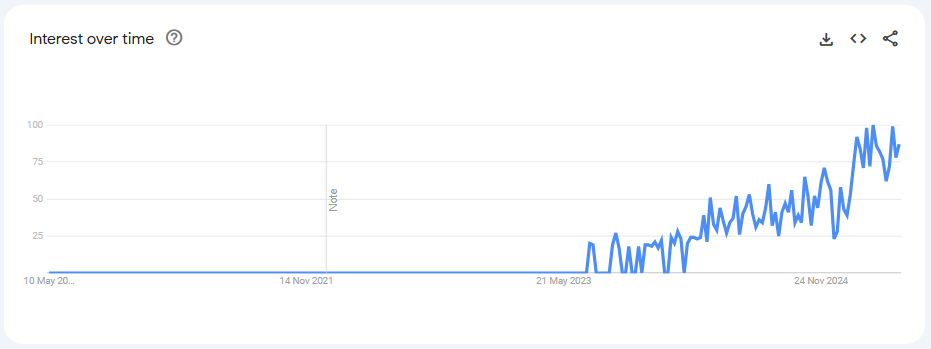

And interest is growing, FAST…

Key Differences Between SEO and LLM Optimization

The rise of LLMs has introduced a new paradigm in content optimization, so let’s dive deeper into the fundamental differences between SEO and LLM optimization.

Focus: Search Results vs. Direct Answers

- SEO: The goal of SEO is to improve visibility on Search Engine Results Pages (SERPs). Users input a query, browse through search results, and decide which link to click.

- LLMO: The primary focus is to provide direct answers to user queries within the AI tool itself, often eliminating the need for users to visit external links.

Here’s an example:

- SEO: A search for “best email marketing tools” may return a list of articles ranked by relevance.

- LLM: The same query might yield a direct, conversational recommendation like “The top email marketing tools are Mailchimp, Constant Contact, and ActiveCampaign.”

Content Length: In-Depth vs. Concise

- SEO: Traditional SEO rewards long-form, in-depth content that covers topics comprehensively, often exceeding 2,000 words.

- LLMO: LLMs prioritize concise, to-the-point answers that address the query directly within a few sentences or a paragraph.

Here’s why it matters:

LLMs process large volumes of data but aim to deliver summaries rather than exhaustive articles. Detailed content still plays a role: it establishes authority, and it can end up in the data LLMs learn from.

Content Structure: Scannable Pages vs. Conversational Tone

- SEO: Search engines favor content with clear formatting, including headings, bullet points, and lists, as it improves scannability for users.

- LLMO: While structure is still important, the tone and flow of the content must feel conversational and human. LLMs prefer language that mirrors how users naturally communicate.

Here’s my example:

- SEO: “Email marketing tools have several features, such as automation, analytics, and templates. Below are the top tools ranked by features.”

- LLM: “If you’re looking for email marketing tools, automation and analytics are key. Try tools like Mailchimp for a beginner-friendly experience.”

Ranking Factors: Traditional Metrics vs. Semantic Relevance

- SEO: Factors like backlinks, domain authority, and exact-match keywords heavily influence rankings.

- LLMO: LLMs prioritize semantic relevance, user intent, and the context of the information provided.

Our example:

- SEO: A page optimized with keywords like “best AI tools” multiple times may rank well.

- LLM: The AI prefers content that answers related semantic queries like “What are some practical uses for AI tools?” and “Which AI tools improve productivity?”

Intent Matching: Keyword-Driven vs. User-Focused

- SEO: Heavily relies on keywords to gauge intent and relevance.

- LLMO: Focuses on understanding user intent holistically, even when the phrasing differs.

Here’s why it matters:

LLMs interpret intent beyond exact-match keywords. For example, an LLM is likely to treat “How do I start with email automation?” and “Beginner’s guide to email marketing automation” as the same underlying request.

Engagement: Clicks and Traffic vs. Engagement Within the AI

- SEO: Success is often measured by metrics like organic traffic, click-through rates (CTR), and bounce rates.

- LLMO: Success hinges on whether the AI finds the content relevant enough to include in its responses or cite as a source.

Instead of optimizing solely for clicks, you need to focus on delivering authoritative, well-researched, and directly usable content that’s helpful to REAL humans.

Real-Time Adaptability: Static vs. Dynamic Updates

- SEO: Websites need regular updates to maintain rankings, but the impact can take weeks to show due to indexing delays.

- LLMO: LLMs draw on recent training data and, in tools with live retrieval, on current web pages, making current, accurate information more critical than ever.

Penny’s Tip:

Make sure evergreen content is updated with the latest stats, trends, and citations to remain useful for LLM-generated answers.

Content Verification: Backlinks vs. Credibility

- SEO: Search engines value backlinks from high-authority websites as proof of credibility.

- LLMO: LLMs assess credibility based on the accuracy of claims, the presence of citations, and the overall consistency of the information with other reputable sources.

Best Practice:

Incorporate verifiable data and include citations to authoritative sources. For instance:

- “According to HubSpot, companies with blogs generate 55% more site traffic.”

User Experience: Search Exploration vs. Instant Gratification

- SEO: Users explore multiple links, gather information, and form conclusions.

- LLMO: Users receive direct answers, making the “first impression” of the content pivotal to its effectiveness.

Comparing Traditional SEO with LLM Optimization

Traditional SEO vs LLM Optimization

| Aspect | Traditional SEO | LLM Optimization |
|---|---|---|
| Goal | Improve SERP rankings | Provide direct answers within AI tools |
| Content Length | Long-form, detailed | Concise, to-the-point |
| Tone | Professional and structured | Conversational and natural |
| Ranking Factors | Backlinks, keywords, domain authority | Semantic relevance, accuracy, intent |
| Intent | Keyword-driven | Holistic understanding of user queries |
| Engagement | Click-through rates and traffic | AI tool inclusion and user satisfaction |
| Updates | Periodic content updates | Real-time accuracy and adaptability |

Optimizing for Agents: The Future of UX is AX

In the emerging Agentic Internet, humans increasingly rely on AI agents, powered by LLMs, to navigate and retrieve information on their behalf. This new paradigm demands a shift from optimizing for user experience (UX) to Agent Experience (AX). Unlike human users, agents prioritize:

- Structured data over visuals

- Semantic URLs and metadata

- Comparative content and listicles

- Fast-loading, JavaScript-light pages

The concept of AX focuses on optimizing not for human users, but for AI agents that fetch and summarize content for users.

To future-proof your content, design it with AX in mind. James Cadwallader (CEO, Profound) says: “SEO professionals must shift toward optimizing the Agent Experience.” Focus on clarity, machine-readable structure, and rich metadata to ensure your brand is favored in zero-click agent-driven interactions.

Use The Tool built for this exact moment in SEO history

Try Penfriend and get your first 3 articles free.

Writing LLM-Optimized Content

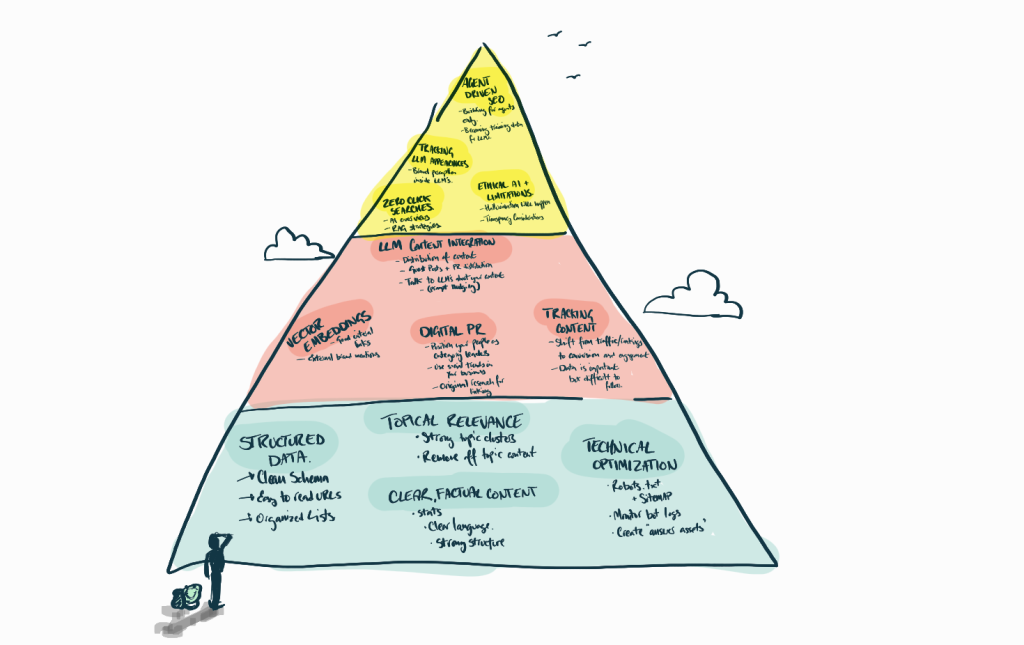

Before diving into tactical advice, it’s helpful to zoom out and understand the bigger picture of what makes content truly effective in the LLM era. Two frameworks guide our approach:

- The AI Content Success Pyramid, which organizes everything you need to know about LLM optimization into three strategic layers: content structure, authority signals, and technical infrastructure.

- The CAPE Framework for LLMO, which makes sure your content is optimized across four essential dimensions: Content, Authority, Performance, and Entity.

Together, these frameworks give you a clear mental model for building content that not only ranks in traditional SEO, but also gets surfaced in AI-generated answers and zero-click environments.

What Is the AI Content Success Pyramid?

The AI Content Success Pyramid is a visual framework that breaks down the layers of LLM optimization into three strategic tiers: Content Structure, Authority Signals, and Technical Infrastructure. It helps marketers understand what matters most when trying to get content cited by large language models (LLMs) and featured in AI-generated responses.

Each tier of the pyramid plays a crucial role:

- Foundational Layer (Content Structure & Relevance): This is the base of the pyramid, where content must be clear, well-organized, and written in a tone that LLMs favor: conversational, concise, and semantically rich.

- Authority Layer (Off-Page Signals): This middle layer focuses on building credibility across the web. It includes digital PR, backlinks from trusted sources, social proof, and presence on authoritative platforms like Wikipedia or Reddit.

- Technical Layer (Optimization Infrastructure): At the top of the pyramid is the infrastructure LLMs rely on to retrieve and understand your content. This includes schema markup, internal linking, fast performance, and bot-readable metadata.

Together, these layers form the foundation of a successful LLM content strategy, making sure your content is not only discovered and indexed, but also trusted and surfaced in AI responses.

The CAPE Framework for LLM Optimization

We use the CAPE Framework – a strategic model designed (by us) to optimize content across four key dimensions:

C = Content

- Clear, concise, conversational writing

- Chunked formatting and semantic keyword clusters

- Upfront summaries and question-based headings

A = Authority

- Topical depth and relevance

- Digital PR and media citations

- Entity consistency across platforms

P = Performance

- Schema implementation and crawlability

- Fast-loading pages, minimal JS

- Inclusion in AI Overviews and Common Crawl

E = Entity

- Strong brand-topic associations

- Optimized ‘About’ and Organization pages

- Mentioned in LLM prompts and bidirectional queries

When you combine the CAPE Framework with the AI Content Success Pyramid, you can future-proof your content with discoverability in an LLM-driven web.

Want content that’s already optimized for LLMs?

Try Penfriend and get your first 3 articles free.

Best Practices To Help Your Brand Get Cited In AI Answers.

I’ve got 10 for you. Plus some bonus ones at the end.

1. Write Conversationally

LLMs excel at processing natural language. Writing in a conversational tone makes your content more engaging and relatable, both for AI tools and users.

Implementation Tips:

- Use first and second person (“we” and “you”) to make content feel personal.

- Simplify technical jargon unless your audience requires it.

- Write as though you’re explaining the topic to a friend or colleague.

- Avoid robotic or overly formal phrasing.

Penny’s example:

Before: “A website’s user interface should be designed to optimize for engagement metrics and retention.”

After: “Want users to stick around? Design your website so it’s easy to navigate and fun to explore.”

2. Use Clear Headings and Subheadings

Structured content helps LLMs break down and retrieve information more efficiently. Descriptive headings act as guides for both readers and AI, directly answering specific queries.

Implementation Tips:

- Use H2s for main topics and H3s for subtopics. For example:

- H2: “How to Optimize Content for LLMs”

- H3: “Why Clear Headings Improve LLM Results”

- Phrase headings as questions to align with user intent (e.g., “What Are the Benefits of Semantic Keywords?”).

- Ensure headings are concise, ideally under 60 characters.

Penny’s example Structure:

- H2: “How to Write for AI Tools Like ChatGPT”

- H3: “What Tone Works Best for LLMs?”

- H3: “How Do LLMs Process Subheadings?”
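
If you want to check headings in bulk, here’s a minimal Python sketch of the two rules above: the under-60-characters guideline and question phrasing. The function name `audit_headings` and the report fields are my own illustrative choices, and “ends with a question mark” is only a rough stand-in for “phrased as a question.”

```python
def audit_headings(headings, max_len=60):
    """Report length and question phrasing for each heading.

    max_len mirrors the "under 60 characters" guideline; treat it as a
    rule of thumb, not a hard limit imposed by any AI tool.
    """
    report = []
    for text in headings:
        clean = text.strip()
        report.append({
            "heading": clean,
            "length": len(clean),
            "within_limit": len(clean) < max_len,
            # Rough heuristic: a heading counts as a question if it ends with "?"
            "is_question": clean.endswith("?"),
        })
    return report


if __name__ == "__main__":
    for row in audit_headings([
        "How to Write for AI Tools Like ChatGPT",
        "What Tone Works Best for LLMs?",
    ]):
        print(row)
```

Run it against the H2s and H3s exported from your CMS and rewrite anything flagged as too long or not question-shaped, where a question makes sense.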

3. Include FAQs

FAQs are indispensable for LLM optimization. They allow you to address specific user queries directly, increasing the likelihood of your content being included in AI-generated responses.

Implementation Tips:

- Add a dedicated FAQ section at the bottom of your content or integrate questions throughout.

- Use natural language for questions (e.g., “How does LLM optimization differ from SEO?”).

- Answer each FAQ in 2–3 sentences, with links to detailed resources if needed.

- Use FAQ schema markup to improve visibility for both search engines and LLMs.

Penny’s example FAQ Format:

- Q: “What is LLM optimization?”

- A: “LLM optimization involves tailoring content to perform well in AI-driven platforms like ChatGPT by focusing on semantic relevance and concise answers.”

4. Provide Concise Answers

LLMs prioritize content that directly addresses user queries. Long-form content is still valuable for depth, but concise answers are essential for AI summaries.

Implementation Tips:

- Begin each section with a clear answer before diving into details.

- Use bullet points or numbered lists to summarize key points.

- Keep answers to 2–3 sentences whenever possible.

- Break up long paragraphs to improve readability.

Penny’s example:

Query: “What are the benefits of FAQ sections?”

Answer: “FAQs improve content scannability, address user queries directly, and boost visibility in AI-driven tools. They also enhance user experience by providing quick answers.”

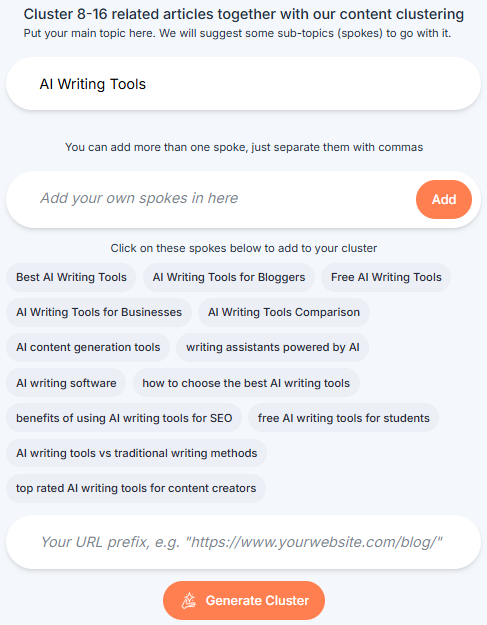

5. Incorporate Semantic Keywords

Unlike traditional SEO, LLMs don’t rely solely on exact-match keywords. They interpret semantic meaning, which means your content should include variations and related terms.

Tip: Penfriend’s Semantic Keyword Integration feature automatically clusters related terms, simplifying the process of semantic keyword optimization.

Implementation Tips:

- Identify clusters of related keywords and incorporate them naturally into your content. Penfriend’s Semantic Keyword Integration can add these clusters for you automatically.

- Use tools like Semrush or Ahrefs to find long-tail and semantic keyword variations.

- Include synonyms and alternate phrasing (e.g., “email automation” and “email workflows”).

- Avoid keyword stuffing; focus on intent over repetition.

Penny’s Keyword Cluster Example:

For the topic “AI Writing Tools,” include:

- “Generative AI tools”

- “Content automation platforms”

- “Tools for writing blogs with AI”
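
To see which phrases from a cluster like this actually made it into a draft, a simple coverage check can help. This is a rough sketch using plain case-insensitive substring matching; it doesn’t measure semantic similarity, and the name `cluster_coverage` is just an illustrative choice.

```python
def cluster_coverage(text, cluster):
    """Return (found, missing) phrases from `cluster`.

    Matching is a plain case-insensitive substring test, so it only tells
    you whether the exact phrase appears, not whether the idea is covered.
    """
    lowered = text.lower()
    found = [phrase for phrase in cluster if phrase.lower() in lowered]
    missing = [phrase for phrase in cluster if phrase.lower() not in lowered]
    return found, missing


if __name__ == "__main__":
    draft = "Generative AI tools and content automation platforms save time."
    cluster = [
        "Generative AI tools",
        "Content automation platforms",
        "Tools for writing blogs with AI",
    ]
    found, missing = cluster_coverage(draft, cluster)
    print("found:", found)
    print("missing:", missing)
```

Treat the “missing” list as prompts for natural additions, not as terms to force in.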

6. Accuracy and Credibility

LLMs prioritize reliable content, especially when generating answers for technical or factual queries. Ensuring accuracy boosts your content’s chances of being featured.

Implementation Tips:

- Cite authoritative sources, including links to studies, reports, or expert opinions.

- Regularly update evergreen content with the latest data.

- Include “Last Updated” timestamps for transparency.

- Fact-check your content thoroughly before publishing.

Penny’s example of Credible Citation:

“According to HubSpot, businesses that blog see 126% more leads than those that don’t.”

7. Use Contextually Relevant Examples

LLMs value examples that help clarify concepts for users.

Implementation Tips:

- Include analogies or scenarios that make complex topics easier to grasp.

- For technical topics, use simplified workflows or case studies.

- Relate examples to your target audience’s industry or needs.

Penny’s example:

Topic: “How LLMs Prioritize Content”

Example: “Think of LLMs like a librarian who categorizes books by subject and relevance rather than title alone.”

8. Incorporate Rich Media (Visuals and Videos)

While LLMs focus on text, including multimedia improves overall user engagement and content retention.

Implementation Tips:

- Add descriptive alt text for images to help LLMs parse their content.

- Use infographics to simplify complex information.

- Link to short, explanatory videos that enhance the topic.

Penny’s example Alt Text:

“An infographic showing the steps for LLM optimization, including keyword research, concise writing, and FAQ integration.”

9. Use Internal Linking

Internal links improve the contextual relevance of your content and help LLMs understand relationships between topics.

Implementation Tips:

- Link to related articles, guides, or tools on your website.

- Use descriptive anchor text (e.g., “Learn more about schema markup”).

10. Optimize for Multimodal LLMs

Some LLMs process text, images, and other media. Preparing for multimodal optimization can future-proof your content.

Implementation Tips:

- Add metadata to non-text elements to enhance discoverability.

- Include charts, graphs, or tables where relevant.

Off‑Page Authority Tactics: The Fastest Lever for LLM Visibility

Large‑language models lean heavily on entity popularity signals – mentions, links, and “cite‑worthy” data. That means classic Digital PR (earned media + thought‑leadership) now fuels both Google and ChatGPT.

A 2024 KDD study introduced Generative Engine Optimization (GEO) and benchmarked nine page‑editing tactics across 10 000 queries. The winners? Adding verifiable citations, inserting hard numbers, and improving fluency – each boosted a page’s share of word‑count‑adjusted citations by up to 41 %.

Tactics that move the needle fast

| Move | Why it Works for LLMs | Quick How‑to |
|---|---|---|
| Earn data‑driven mentions (studies, polls, benchmarks) | LLMs surface stat‑rich pages as authoritative sources | Publish one proprietary data set/quarter; pitch it to 10 industry reporters via Featured.com |
| Place expert quotes (HARO, Qwoted, Featured) | Entity co‑citation bonds your brand to target keywords | Block 1 hour/week for quote outreach; track wins in Airtable |
| Backlink‑profile depth | More relevant links = stronger entity | Target 15 new DR60+ links/quarter; measure topical overlap, not just DR |
| Reddit & UGC seeding | Many LLMs ingest Reddit dumps; real conversations build trust | Run one AMA, answer 5 niche questions/month |
| Create / polish your Wikipedia & Wikidata entry | Still the cleanest “seed” data‑set for LLM training | Follow Wiki notability rules; disclose sources |
| Add Hard Statistics | Delivers 28–40 % lift | Research stats with Perplexity, Google |

- Digital PR and Media Mentions: Publish original research or data-driven reports to attract media coverage. For example, a small SaaS startup gained industry visibility by releasing a detailed customer success study, earning multiple mentions in niche publications.

“GEO methods that inject concrete statistics lift impression scores by 28 % on average.”

arXiv, Cornell University

- Leveraging HARO and Industry Collaborations: Use Help a Reporter Out (HARO) to earn expert citations and build authority at no cost. Numerous startups have successfully boosted their visibility by providing insightful quotes and expertise in popular industry publications via HARO.

- UGC and Reddit Community Engagement: Participate authentically in Reddit forums and user-generated content (UGC) platforms. The CEO of SpaceX, Elon Musk, frequently engages with Reddit communities, building trust and brand visibility. Even smaller brands can replicate this tactic through consistent, genuine interaction.

- Establishing a Wikipedia and Wikidata Presence: Create accurate, well-sourced entries on Wikipedia and Wikidata to increase credibility and authority. A well-known technology startup saw increased citation rates in LLM-generated answers after establishing an authoritative Wikipedia page.

Relevance Engineering: A New Discipline for LLM SEO

Relevance Engineering blends principles from UX, information retrieval, and digital PR to align your content with how LLMs interpret meaning, context, and topical authority.

Here’s how to apply Relevance Engineering:

- Semantic Clustering: Group related content based on meaning rather than keyword match. Penfriend’s clustering tools help you build tightly themed content ecosystems.

- Query Expansion: Anticipate adjacent queries and incorporate variations and synonyms to broaden retrieval scope.

- Intent Mapping: Ensure each piece of content targets a specific user intent (informational, navigational, transactional) and includes LLM-friendly phrasing.

- Link Relevance, Not Just Volume: Secure backlinks that connect your brand with semantically related sources, not just high-authority domains.

- Content Fanout: Use content hubs and internal links to create multiple semantic entry points, boosting discoverability in vector-based retrieval systems.

The goal is to ensure your content isn’t just keyword-optimized but meaning-optimized, context-aware, and engineered for LLM comprehension.

Technical Optimizations for LLMs

While content quality is vital, technical optimizations make sure your content is discoverable and usable by LLMs. Implementing these technical strategies can significantly enhance your content’s visibility and relevance in AI-driven environments.

1. Schema Markup

Schema markup is structured data that helps search engines and LLMs interpret your content. For LLMs, schema markup provides critical context and organizes your content for efficient retrieval.

Key Types of Schema for LLMs:

- FAQ Schema: Highlights questions and answers for LLMs, increasing their chances of inclusion in conversational queries.

- How-To Schema: Guides users step-by-step, perfect for action-oriented queries.

- Article Schema: Provides metadata like the article title, author, and publication date, signaling credibility and relevance.

Do LLMs read structured data?

Evidence is mixed. Recent tests show many AI crawlers skip JSON‑LD that’s injected via JavaScript, while some experts still advocate full markup.

Our stance: keep Schema.org for Google Rich Results, but also render key answers and FAQs directly in the HTML so LLMs can parse them in plain text.

Implementation Tips:

- Use tools like Google’s Structured Data Markup Helper or plugins like RankMath to add schema to your content.

- Validate your schema markup with Google’s Rich Results Test to ensure it’s correctly implemented.

- Avoid schema overloading; use only relevant types to maintain clarity.
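
Since crawlers that don’t run JavaScript only see the raw HTML response, it can be worth checking whether your schema is present there. Here’s a minimal sketch using Python’s standard `html.parser`; the class and function names (`JsonLdExtractor`, `static_schema_types`) are illustrative, and it assumes you pass in the unrendered HTML source (for example, what “view source” shows).

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects parsed JSON-LD from <script type="application/ld+json"> tags."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            try:
                self.blocks.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                pass  # malformed JSON-LD is skipped rather than raising


def static_schema_types(raw_html):
    """Return the @type of each top-level JSON-LD object in the raw HTML."""
    parser = JsonLdExtractor()
    parser.feed(raw_html)
    return [block.get("@type") for block in parser.blocks if isinstance(block, dict)]


if __name__ == "__main__":
    page = (
        '<html><head><script type="application/ld+json">'
        '{"@context": "https://schema.org", "@type": "FAQPage", "mainEntity": []}'
        "</script></head><body></body></html>"
    )
    print(static_schema_types(page))
```

An empty result on a page where you expect schema suggests the markup is being injected client-side.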

Example FAQ Schema Code:

```json
{
  "@context": "https://schema.org",
  "@type": "FAQPage",
  "mainEntity": [
    {
      "@type": "Question",
      "name": "What is LLM Optimization?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "LLM optimization involves tailoring content to rank well in AI-driven platforms like ChatGPT by focusing on semantic relevance and concise answers."
      }
    }
  ]
}
```
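
If you maintain many FAQs, you could generate this markup rather than hand-write it. The sketch below builds the same FAQPage structure from question/answer pairs and also renders the answers as plain HTML, in line with the “render key answers directly in the HTML” stance above. The function names and the `<h3>`/`<p>` layout are my own assumptions, not a required format.

```python
import json
from html import escape


def build_faq_jsonld(faqs):
    """Build a schema.org FAQPage dict from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }


def render_faq_html(faqs):
    """Render FAQs as visible HTML followed by the matching JSON-LD script."""
    parts = [f"<h3>{escape(q)}</h3>\n<p>{escape(a)}</p>" for q, a in faqs]
    jsonld = json.dumps(build_faq_jsonld(faqs), indent=2)
    # Escape "</" so answer text can never close the <script> tag early;
    # "\/" is a valid JSON escape for "/".
    jsonld = jsonld.replace("</", "<\\/")
    parts.append(f'<script type="application/ld+json">\n{jsonld}\n</script>')
    return "\n".join(parts)


if __name__ == "__main__":
    print(render_faq_html([
        ("What is LLM optimization?",
         "LLM optimization involves tailoring content to perform well in "
         "AI-driven platforms by focusing on semantic relevance and concise answers."),
    ]))
```

Keeping the visible text and the JSON-LD generated from one source means the two can’t drift apart.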

2. Metadata Optimization

Metadata (titles, descriptions, and headers) acts as a roadmap for LLMs, summarizing the content’s purpose and scope. Well-optimized metadata improves the chances of your content being included in AI-generated responses.

Best Practices for Metadata:

- Meta Titles: Ensure they are concise (50–60 characters) and include semantic keywords.

- Meta Descriptions: Summarize the content in 155–160 characters. Focus on user intent and include secondary keywords naturally.

- Headers (H1, H2, etc.): Use descriptive, question-based headers to improve semantic clarity.

Implementation Tips:

- Include target keywords and synonyms in meta titles and descriptions without keyword stuffing.

- Use SERP simulation tools to preview how your metadata appears in search results.

- Regularly review and update metadata to align with evolving queries and trends.

Example Metadata:

- Meta Title: “What is LLM Optimization? A Beginner’s Guide to AI Content Success”

- Meta Description: “Learn how to optimize your content for LLMs like ChatGPT with tips on schema, metadata, and concise answers.”
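
The length guidelines above are easy to check automatically. Here’s a small sketch; the function name `check_metadata` and the “short/ok/long” labels are illustrative, and the default ranges are simply the 50–60 and 155–160 character guidelines from this section.

```python
def check_metadata(title, description,
                   title_range=(50, 60), description_range=(155, 160)):
    """Compare meta title and description lengths against target ranges."""

    def status(text, bounds):
        low, high = bounds
        length = len(text)
        if length < low:
            verdict = "short"
        elif length > high:
            verdict = "long"
        else:
            verdict = "ok"
        return {"length": length, "verdict": verdict}

    return {
        "title": status(title, title_range),
        "description": status(description, description_range),
    }


if __name__ == "__main__":
    print(check_metadata(
        "What is LLM Optimization? A Beginner's Guide",
        "Learn how to optimize your content for LLMs like ChatGPT.",
    ))
```

Character counts are only a proxy for how much of a title or description gets displayed, so treat a “long” verdict as a prompt to review rather than an error.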

3. Internal Linking

Internal links create a content hierarchy that helps LLMs understand the relationships between pages on your website. They improve discoverability and provide context for AI tools processing your content.

Best Practices for Internal Linking:

- Link to cornerstone content (e.g., hub pages or detailed guides).

- Use descriptive anchor text that reflects the destination page’s content (e.g., “Read about schema markup best practices” instead of “Click here”).

- Limit the number of links per page to maintain clarity and avoid overwhelming users.

Implementation Tips:

- Use tools like Screaming Frog to audit and optimize your internal linking structure.

- Add links to related FAQs or sections within your content.

- Use breadcrumb navigation to enhance contextual relevance for LLMs.

Example Internal Link:

“If you’re new to LLMs, check out our comprehensive guide on LLM optimization basics.”
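
For a quick anchor-text audit without a crawler, something like the following sketch can work on a single page’s HTML. It uses only the Python standard library; the names (`LinkCollector`, `audit_internal_links`) and the `GENERIC_ANCHORS` list are my own assumptions, so extend the list to match your site.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

# Hypothetical list of anchor texts considered non-descriptive.
GENERIC_ANCHORS = {"click here", "here", "read more", "learn more", "this"}


class LinkCollector(HTMLParser):
    """Collects (href, anchor_text) pairs from <a> tags."""

    def __init__(self):
        super().__init__()
        self._href = None
        self._text = []
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())
            self.links.append((self._href, text))
            self._href = None


def audit_internal_links(raw_html, page_url):
    """List same-host links and flag those with generic anchor text."""
    site = urlparse(page_url).netloc
    collector = LinkCollector()
    collector.feed(raw_html)
    internal = []
    for href, text in collector.links:
        absolute = urljoin(page_url, href)
        if urlparse(absolute).netloc == site:
            internal.append({
                "url": absolute,
                "anchor": text,
                "generic": text.lower() in GENERIC_ANCHORS,
            })
    return internal


if __name__ == "__main__":
    html = ('<a href="/guides/schema">Read about schema markup best practices</a>'
            '<a href="/pricing">Click here</a>')
    for row in audit_internal_links(html, "https://example.com/blog/post"):
        print(row)
```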

4. Regular Updates

Fresh, accurate information is a priority for LLMs, which are trained on vast datasets and often reference real-time sources. Regular updates to your content keep it relevant and useful for both users and AI.

Content to Update:

- Evergreen Content: Add recent statistics, trends, or developments to maintain relevance.

- FAQs: Review and expand FAQs based on evolving user queries.

- Outdated Information: Remove or revise content that’s no longer valid.

Implementation Tips:

- Schedule periodic audits to identify and update outdated content.

- Highlight recent updates with a “Last Updated” date on the page.

- Use tools like ContentKing or Google Search Console to monitor content performance and identify opportunities for improvement.

Example Update Format:

“This guide was last updated in December 2024 to include the latest trends in LLM optimization.”

5. Optimize for Fast Loading Speeds

Loading speed is critical for user experience and impacts how LLMs process your content. Slow pages risk being deprioritized.

Implementation Tips:

- Compress images using tools like TinyPNG or ImageOptim.

- Enable browser caching and minimize JavaScript with plugins like WP Rocket.

- Use Google’s PageSpeed Insights to identify bottlenecks and improve load times.

6. Add Descriptive Alt Text to Visuals

LLMs process text more efficiently than visuals, but descriptive alt text allows them to “understand” images in your content.

Implementation Tips:

- Use concise descriptions of the image’s purpose or content.

- Include keywords naturally but avoid keyword stuffing.

Example Alt Text:

“An infographic showing the steps for optimizing content for LLMs, including schema, concise writing, and metadata updates.”
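
To find images that still need alt text, a short standard-library sketch like this one can scan a page’s HTML. The names are illustrative, and note one assumption: it flags empty alt attributes too, even though an empty alt is legitimate for purely decorative images.

```python
from html.parser import HTMLParser


class ImageAltAuditor(HTMLParser):
    """Records the src of every <img> whose alt text is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            alt = (attributes.get("alt") or "").strip()
            if not alt:
                # Empty alt is valid for decorative images; review these by hand.
                self.missing_alt.append(attributes.get("src", ""))


def images_missing_alt(raw_html):
    """Return the src values of images without usable alt text."""
    auditor = ImageAltAuditor()
    auditor.feed(raw_html)
    return auditor.missing_alt


if __name__ == "__main__":
    sample = '<img src="steps.png" alt="Steps for LLM optimization"><img src="hero.png">'
    print(images_missing_alt(sample))
```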

7. Enable HTTPS and Mobile Optimization

LLMs prefer secure, mobile-friendly content, as it aligns with broader user expectations.

Implementation Tips:

- Ensure your website uses HTTPS to improve security and trustworthiness.

- Use responsive design to optimize content for all screen sizes.

Integrate these technical optimizations, and your content has a higher chance of not only performing better in traditional SEO, but also aligning with the specific requirements of LLM-driven search and answer.

8. Getting Into the LLM Corpus

To appear in LLM-generated answers, your content needs to be present in the data sources these models are trained on – most commonly, Common Crawl. Not all LLMs rely exclusively on it, but it’s a major training source, especially for open-source models.

Topical relevance > domain authority. Getting into Common Crawl + demonstrating page-level originality are critical for inclusion in LLM outputs.

Here are several ways to boost your chances of corpus inclusion:

- Publish on Crawl-Indexed Domains: Platforms like Medium, WhitePress, and PR Newswire are regularly scraped by Common Crawl. Syndicating content there increases visibility.

- Optimize Branded Assets: Ensure your About pages, blog posts, and FAQs are crawlable and indexable. Include structured data like Organization, Article, and FAQPage schema.

- Get Indexed in Bing: Since Bing’s index supplies web results to Copilot and has been used for ChatGPT’s web browsing, appearing in Bing SERPs increases your retrieval potential.

- Nudge LLMs About Your Content: Engage directly with tools like ChatGPT by referencing your own articles. This can create feedback loops that increase perceived authority.

- Check Inclusion: Use tools like Gemini or SerpAPI to check if your content is cited, linked, or referenced in AI-generated answers.
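
You can also check Common Crawl directly, since it publishes a queryable URL index for each crawl. The sketch below builds an index query and parses the newline-delimited JSON it returns. Treat the details as assumptions to verify: the collection id `CC-MAIN-2024-33` is only an example (the current list is published at index.commoncrawl.org/collinfo.json), the helper names are mine, and the index may respond with an HTTP error when nothing matches.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def build_index_query(collection, url_pattern):
    """Build a Common Crawl index query URL for one crawl collection."""
    params = urlencode({"url": url_pattern, "output": "json"})
    return f"https://index.commoncrawl.org/{collection}-index?{params}"


def parse_index_response(body):
    """Parse the newline-delimited JSON records returned by the index."""
    return [json.loads(line) for line in body.splitlines() if line.strip()]


def captured_urls(collection, url_pattern, timeout=30):
    """Fetch index records (network call) and return distinct captured URLs.

    urlopen raises urllib.error.HTTPError on error responses, which may
    include the "no captures" case; callers should handle that.
    """
    query = build_index_query(collection, url_pattern)
    with urlopen(query, timeout=timeout) as response:
        records = parse_index_response(response.read().decode("utf-8"))
    return sorted({record["url"] for record in records if "url" in record})


if __name__ == "__main__":
    # Example collection id and domain; replace both with your own.
    print(build_index_query("CC-MAIN-2024-33", "example.com/*"))
```

Calling `captured_urls("CC-MAIN-2024-33", "yourdomain.com/*")` would list which of your pages were captured in that crawl, which is a more direct signal than asking a chatbot.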

9. Optimizing for AI Overviews and RAG

With the rise of AI Overviews (AIOs) in Google, powered by Retrieval-Augmented Generation (or RAG), LLMs increasingly extract semantically chunked passages from content to form synthetic answers. To optimize for this:

- Break content into logical, well-labeled chunks

- Use structured subheadings and paragraph-level clarity

- Ensure stats, comparisons, and summaries are clearly delineated

New Insight: Mike King, CEO and founder of iPullRank, reported that approximately 20–30% of Google queries now trigger AI Overviews. These are powered by RAG, meaning your content must be chunked semantically and formatted for snippet retrieval.

The more logically segmented your content, the easier it is for LLMs to extract and cite specific passages in AIOs.
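
To preview how a retrieval system might slice your page, you can split a draft into heading-labelled chunks yourself. This is a simplified sketch, not how any particular RAG pipeline works: it assumes the draft uses markdown-style `#` headings, and the name `chunk_by_headings` is illustrative.

```python
def chunk_by_headings(markdown_text):
    """Split text into chunks, each labelled with the heading above it.

    Text before the first heading gets a heading of None.
    """
    chunks = []
    current = {"heading": None, "lines": []}
    for line in markdown_text.splitlines():
        if line.lstrip().startswith("#"):
            if current["heading"] is not None or current["lines"]:
                chunks.append(current)
            current = {"heading": line.lstrip("# ").strip(), "lines": []}
        elif line.strip():
            current["lines"].append(line.strip())
    if current["heading"] is not None or current["lines"]:
        chunks.append(current)

    result = []
    for chunk in chunks:
        text = " ".join(chunk["lines"])
        result.append({
            "heading": chunk["heading"],
            "text": text,
            "words": len(text.split()),
        })
    return result


if __name__ == "__main__":
    draft = "## What is LLMO?\nIt is a practice.\n\n## How do I start?\nWrite clearly.\n"
    for chunk in chunk_by_headings(draft):
        print(chunk)
```

Chunks with a vague heading, or with several hundred words under one heading, are good candidates for splitting or relabelling.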

How LLMs Perceive Your Brand: Entity Mapping and Attention Hotspots

Large Language Models don’t just evaluate pages, they form conceptual relationships between brands, topics, and entities over time. These associations affect whether and how your brand appears in AI-generated answers.

To influence these associations, focus on entity optimization:

- Strengthen Brand–Topic Links: Use consistent schema markup (Organization, Person, Product) and naturally mention your brand alongside the key terms you want it associated with.

- Optimize Your ‘About’ Page: Include a clear mission, services, and team bios with links to social proof or media mentions.

- Use Structured Data: Reinforce brand relationships with structured data that clearly connects your organization to niche topics, industries, or people.

- Monitor Attention Hotspots: Use LLM feedback tools and bidirectional querying (e.g., “What does ChatGPT know about [Your Brand]?”) to evaluate how your brand is perceived and where it shows up in relation to competitors.

- Create Contextual Mentions Across the Web: Earn links and citations from reputable sources that reference both your brand and your core topic clusters.
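
As one way to express those brand-to-topic connections in structured data, here’s a sketch that builds Organization markup. `sameAs` and `knowsAbout` are existing schema.org properties; the function name and every value in the usage example (company name, URLs, topics) are hypothetical placeholders.

```python
import json


def build_organization_jsonld(name, url, description, same_as, topics):
    """Build schema.org Organization markup linking a brand to its topics."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        # Profiles on other platforms that refer to the same entity.
        "sameAs": list(same_as),
        # Topics the organization wants to be associated with.
        "knowsAbout": list(topics),
    }


if __name__ == "__main__":
    # All values below are placeholders for illustration.
    markup = build_organization_jsonld(
        name="Example Co",
        url="https://example.com",
        description="Example Co builds email marketing automation tools.",
        same_as=["https://www.linkedin.com/company/example-co"],
        topics=["email marketing", "marketing automation"],
    )
    print(json.dumps(markup, indent=2))
```

Using the same name, description, and profile links everywhere is what keeps the entity consistent across platforms.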

Measuring Success in the LLM Era

Traditional SEO metrics like keyword rankings and CTRs are no longer the most important indicators of content performance. In an AI-first landscape, content marketers must evolve their measurement frameworks.

Key Metrics to Track:

1. AI Referrals:

Track traffic coming from AI tools like ChatGPT or Bing AI using referral paths in Google Analytics.

Recent industry research from Gartner indicates that by 2028, as much as 50% of search traffic may be diverted from traditional search engines to AI-driven conversational interfaces. As the reliance on LLM-generated answers increases, optimizing your content specifically for AI visibility becomes crucial.

2. Query Match Rates:

Use tools like ChatGPT or Gemini to test how often your content is featured for specific queries.

3. Engagement Metrics:

Analyze bounce rate, time on page, and scroll depth to understand how users interact with your content.

4. Keyword Rankings in AI Context:

Monitor your rankings for semantic keywords relevant to LLM queries.

5. LLM Inclusion Rate: How often your content is cited or summarized by LLMs (e.g., in ChatGPT, Bing Copilot, or Google AI Overviews).

Penny’s Pro Tip:

Create a spreadsheet of target queries, test them monthly in AI tools, and document if and how your content appears in the answers. Adjust your strategy based on results.

6. AI Overview Visibility: Whether your content appears in RAG-powered features like Google’s AI Overviews.

7. Corpus Presence: Whether your content appears in sources that LLMs are known to train on or retrieve from (like Common Crawl or the Bing index).

8. Topical Vector Coverage: How well your site clusters around core themes and semantic topics.

9. Brand–Entity Strength: Whether LLMs associate your brand clearly with key concepts.

Tools like Gemini, Poe, and Penfriend’s internal monitoring features can help assess these signals.
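If you keep the monthly spreadsheet from Penny’s Pro Tip, the LLM Inclusion Rate is simple arithmetic: tests where your content was cited, divided by total tests. Here is a small Python sketch of that calculation. The log rows and the `inclusion_rate_by_month` helper are made up for illustration; in practice the rows would come from your own manual checks.

```python
from collections import defaultdict

# Hypothetical test log: one row per query, per tool, per monthly check.
test_log = [
    {"month": "2025-01", "tool": "ChatGPT", "query": "how to optimize content for AI", "cited": True},
    {"month": "2025-01", "tool": "Gemini", "query": "how to optimize content for AI", "cited": False},
    {"month": "2025-02", "tool": "ChatGPT", "query": "how to optimize content for AI", "cited": True},
    {"month": "2025-02", "tool": "Gemini", "query": "how to optimize content for AI", "cited": True},
]


def inclusion_rate_by_month(rows):
    """Return the share of tests per month in which the content was cited."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for row in rows:
        totals[row["month"]] += 1
        if row["cited"]:
            hits[row["month"]] += 1
    return {month: hits[month] / totals[month] for month in sorted(totals)}


rates = inclusion_rate_by_month(test_log)
for month, rate in rates.items():
    print(f"{month}: {rate:.0%} of tests cited the content")
```

Tracking the rate per month, rather than one overall number, makes it easier to see whether a content update moved the needle.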

Real-World Examples of LLM-Optimized Content

Case Study 1: SaaS Company Boosts Visibility with FAQ Optimization

Challenge:

A SaaS company struggled with visibility in generative AI tools like ChatGPT, even though their blog performed well on Google.

Solution:

- Added a detailed FAQ section to each blog post, addressing long-tail keywords derived from customer queries.

- Rewrote content with a conversational tone to appeal to AI-driven answers.

- Implemented FAQ schema markup to structure the content.

Results:

Featured in ChatGPT responses for over 15 relevant queries.

35% increase in referrals from generative AI platforms.

Case Study 2: E-commerce Brand Dominates AI Product Recommendations

Challenge:

An e-commerce retailer wanted to appear in AI-driven product suggestions for “best running shoes.”

Solution:

- Created concise product descriptions with semantic keywords like “comfortable,” “durable,” and “affordable.”

- Added a robust FAQ section addressing sizing, shipping, and care tips.

- Included citations and links to verified customer reviews.

Results:

Increased direct traffic by 20% through generative AI referrals.

Featured in multiple AI-generated product recommendation lists.

The Future of SEO is LLMO

The Harvard Business Review has suggested that traditional SEO roles are evolving so significantly that, soon, “SEOs will be known as LLMOs”. This highlights the critical need to adapt and embrace new optimization techniques tailored specifically for large language models.

Checklist: 7 Steps to Create LLMO Content

We wanted a checklist for creating LLM-optimized content for our own business and our clients, so we made one. Here’s a recap for a quick skim; you can also download the FULL checklist below if you want our entire process.

- Research Semantic Keywords and User Intent

  - Use tools like Penfriend, SEMrush, Frase.io, and AnswerThePublic to find semantic keywords and trending questions.

  - Group keywords into clusters for structured content planning.

  - Test queries on ChatGPT or Gemini to ensure alignment with user intent.

- Write Concisely and Conversationally

  - Answer questions in 2–3 sentences before elaborating.

  - Simplify technical jargon for better readability.

  - Break up longer sections with pull quotes and inline headers.

  - Use a conversational tone to match LLMs’ preferences.

- Structure Your Content for LLM Retrieval

  - Use H2s and H3s with question-based headings for clarity.

  - Incorporate bullet points, numbered lists, and tables to enhance scannability.

  - Maintain a consistent formatting style for better user experience.

  - Chunk your content logically to fit RAG and AI Overview formatting.

- Add Schema Markup

  - Implement FAQ and How-To schema to improve content visibility.

  - Use tools like Google’s Structured Data Markup Helper, RankMath, or Yoast SEO for schema generation.

  - Validate schema with Google’s Rich Results Test and update periodically.

- Ensure Accuracy and Credibility

  - Cite authoritative sources and include timestamps to signal freshness.

  - Regularly update evergreen content with current trends and data.

  - Add schema-supported citations and keep evergreen pages up-to-date.

  - Audit content for outdated information and refresh as needed.

- Nudge LLMs and Track Corpus Inclusion

  - Mention and share your own content when prompting ChatGPT or Copilot.

  - Publish or repurpose content to crawl-indexed domains like Medium and PR Newswire.

  - Track inclusion via Gemini, Poe, and prompt feedback.

- Test Content on LLMs Before Publishing

  - Input target queries into ChatGPT or Gemini to test alignment and relevance.

  - Evaluate responses for accuracy, clarity, and coverage of user intent.

  - Test multiple query variations to refine phrasing and structure.

- Monitor and Refine Based on Metrics

  - Track key performance indicators like AI referrals, bounce rates, and engagement metrics.

  - Track topical cluster strength and vector coverage.

  - Use tools like Google Analytics and Search Console to identify opportunities for improvement.

  - Document trends and adjust content strategies accordingly.
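For the "track AI referrals" step, one low-tech option is to export referrer URLs from your analytics tool and label the ones that come from AI assistants. The Python sketch below shows the idea. The hostname list is an assumption based on commonly seen referrers, so confirm it against what actually shows up in your own reports; the `classify_referrer` helper and sample URLs are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse

# Assumed referrer hostnames; verify these against your own analytics data.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
    "perplexity.ai": "Perplexity",
}


def classify_referrer(referrer_url):
    """Return the AI tool name for a referrer URL, or None if it is not one."""
    host = (urlparse(referrer_url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return AI_REFERRER_HOSTS.get(host)


# Hypothetical referrer URLs from an analytics export.
referrers = [
    "https://chatgpt.com/",
    "https://www.google.com/search?q=llm+optimization",
    "https://www.perplexity.ai/search?q=llmo",
    "https://chatgpt.com/c/abc",
    "",
]

labels = [classify_referrer(url) for url in referrers]
ai_counts = Counter(label for label in labels if label)
print(ai_counts)
```

Anything that comes back as `None` is ordinary traffic (or a direct visit with no referrer) and can stay in your usual reports.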

LLMO When You Don’t Have a Household Name

- Narrow topical moat: own one micro‑niche (e.g., “server‑side SEO automation”) with 20+ deeply interlinked articles.

- Leverage partner authority: co‑publish with larger SaaS, borrow their brand age/backlinks.

- Piggyback on directories & comparative content: “{BigTool} alternative” pages rank fast and often get cited by LLMs.

- Run entity audits monthly (ChatGPT “Why didn’t you recommend us?” prompt) to spot gaps quickly.

Ready to future-proof your content strategy?

Download our free checklist and follow these 7 steps to create content that ranks in both AI-driven and traditional search environments.

Tools for LLM Optimization

Leveraging the right tools can streamline your LLM optimization efforts, helping you craft content that aligns with both SEO and AI-driven search requirements. Below are some of the best tools for optimizing content for LLMs, with actionable tips for implementation.

1. Penfriend

What It Does:

Penfriend specializes in generating SEO and LLM-optimized content at scale. It mimics your unique tone and style, allowing businesses to produce personalized, high-quality content efficiently.

How to Use It for LLM Optimization:

- Echo Feature: Use Penfriend’s Echo feature to define and replicate your writing style for consistency across all content.

- Templates for Optimization: Leverage its pre-designed templates (e.g., FAQs, “How-To” guides) to create structured, LLM-friendly content.

- Semantic Keyword Integration: Add clusters of related semantic keywords for better alignment with LLM preferences.

Pro Tip:

Use Penfriend to create content specifically tailored for your audience, such as product descriptions, FAQs, or industry-specific articles. Test the output by running it through ChatGPT or Gemini to see how it performs in AI responses.

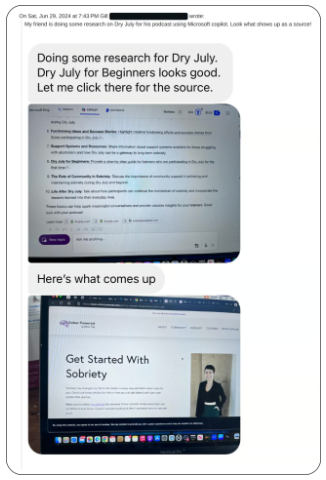

One of our clients, Gill at Soberpowered, used Penfriend for building her blog, and started showing up in LLM answers. One of her clients reached out excited to let her know how they found her!

Penfriend’s AI Optimization Success

To demonstrate the effectiveness of LLM optimization, we recently updated several blog articles to incorporate fresh statistics, clear FAQs, and structured schema markup. Within weeks, these articles began appearing prominently in Bing Chat responses and ChatGPT answers, significantly boosting organic visibility and brand authority. We’ve seen a tangible impact from these LLM optimization tactics.

2. ChatGPT and Gemini

What They Do:

ChatGPT and Gemini are not only conversational AI platforms but also invaluable tools for testing your content’s alignment with user queries and LLM behavior.

How to Use Them for LLM Optimization:

- Query Testing: Input your target keywords or user queries to see how the AI generates responses. Adjust your content to align with how the LLM interprets the query.

- Competitor Benchmarking: Analyze competitor content by asking the LLMs similar questions and identifying gaps in their responses.

- Simulate User Queries: Use conversational prompts to refine the tone and format of your content. For example:

  - Query: “How can I optimize content for AI tools?”

  - Expected Response: Summarized steps based on your content.

Pro Tip:

Ask the AI to critique your content (e.g., “How can this FAQ be improved for readability and relevance?”). Use the feedback to refine your approach.

3. Ahrefs, SEMRush, and AnswerThePublic

What They Do:

These tools are essential for keyword research and uncovering trending questions and topics that align with user intent and LLM optimization.

How to Use Them for LLM Optimization:

- Ahrefs/SEMRush:

  - Identify clusters of long-tail and semantic keywords related to your niche.

  - Analyze competitor pages that rank highly for your target queries.

  - Track performance metrics (e.g., organic traffic, backlink profiles) to assess content effectiveness.

- AnswerThePublic:

  - Discover real-world questions users are asking about your topic.

  - Use the “Questions” and “Comparisons” sections to uncover conversational queries (e.g., “How to,” “Why,” “Is X better than Y?”).

Pro Tip:

Use these tools together: Combine SEMRush’s keyword gap analysis with AnswerThePublic’s trending queries to build a comprehensive list of questions to address in your content.

4. Frase.io

What It Does:

Frase.io is a content optimization tool designed for creating and refining content that aligns with both search engines and LLMs.

How to Use It for LLM Optimization:

- Topic Modeling: Identify subtopics and clusters that LLMs prioritize based on user queries.

- Content Scoring: Evaluate how well your content aligns with specific keywords and semantic intent.

- Answer Optimization: Generate concise answers for FAQs or snippet-friendly content directly within the platform.

5. Grammarly and Hemingway Editor

What They Do:

These tools ensure your content is clear, concise, and free of grammatical errors, all of which are key factors for LLM readability.

How to Use Them for LLM Optimization:

- Grammarly: Use it to adjust tone and fix grammatical errors. The tool’s “Clarity” and “Engagement” scores help ensure your writing is conversational and impactful.

- Hemingway Editor: Simplify complex sentences and identify overly dense paragraphs to make content more digestible for LLMs.

6. Google’s Structured Data Markup Helper

What It Does:

Helps you create and implement structured data (schema markup) to make your content more discoverable by LLMs and search engines.

How to Use It for LLM Optimization:

- Select the schema type (e.g., FAQ, How-To) that matches your content.

- Generate the JSON-LD code and embed it on your web page.

- Test the schema with Google’s Rich Results Test to ensure correct implementation.

Pro Tip:

Focus on adding FAQ and How-To schemas to boost your content’s visibility in LLM-generated answers.
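If you would rather see what the generated JSON-LD looks like before pasting it into a page, here is a short Python sketch that builds `FAQPage` markup from question-and-answer pairs. The `faq_jsonld` helper and the answer text are placeholders for illustration; the structure follows the schema.org `FAQPage`, `Question`, and `Answer` types.

```python
import json


def faq_jsonld(pairs):
    """Build schema.org FAQPage markup from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


# Placeholder answers; use the exact text that appears on your page.
faq = faq_jsonld([
    ("What is LLM optimization?",
     "It is the practice of making content easy for AI tools to find and cite."),
    ("How does schema help with AI search?",
     "It labels the structure and purpose of your content in a machine-readable way."),
])

print('<script type="application/ld+json">')
print(json.dumps(faq, indent=2))
print("</script>")
```

Whichever way you generate the markup, run the output through the Rich Results Test before publishing.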

7. Screaming Frog

What It Does:

A powerful website crawler that audits your website for SEO and technical issues.

How to Use It for LLM Optimization:

- Internal Linking Audit: Identify gaps in your linking structure and add relevant internal links.

- Metadata Review: Check for missing or poorly optimized meta titles and descriptions.

- Broken Links: Fix broken links to improve the crawlability of your content.

Pro Tip:

Use Screaming Frog to ensure all content is accessible and optimized for LLMs.
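To show what an internal linking audit is actually checking, here is a tiny Python sketch that works on a crawl result you already have. It does not call Screaming Frog or crawl anything itself. The `site_links` map and both helper functions are hypothetical; a real audit would start from your crawler’s export.

```python
# Hypothetical crawl result: each page mapped to the internal pages it links to.
site_links = {
    "/": ["/blog/llm-optimization", "/about"],
    "/about": ["/", "/team"],
    "/blog/llm-optimization": ["/blog/faq-schema"],
    "/blog/faq-schema": ["/blog/llm-optimization"],
    "/blog/old-post": ["/"],
}


def find_orphans(links, home="/"):
    """Pages that no other page links to (the homepage is excluded)."""
    linked_to = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source
    }
    return sorted(page for page in links if page not in linked_to and page != home)


def find_broken_targets(links):
    """Link targets that do not exist as pages in the crawl."""
    return sorted({t for targets in links.values() for t in targets if t not in links})


orphans = find_orphans(site_links)
broken = find_broken_targets(site_links)
print("Orphan pages:", orphans)
print("Broken internal links:", broken)
```

Orphan pages are the ones to link to from related articles; broken targets are the ones to fix or redirect.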

8. Google Analytics and Search Console

What They Do:

These tools track performance metrics, helping you refine content strategies for LLMs.

How to Use Them for LLM Optimization:

- Google Analytics: Monitor user behavior metrics like bounce rate, session duration, and conversion rates.

- Search Console: Identify search queries that drive traffic and uncover new opportunities to address user intent.

Pro Tip:

Use Search Console’s “Performance” report to find high-impression queries where your content isn’t ranking and optimize accordingly.
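The same filter is easy to run on an exported report. The Python sketch below assumes a CSV with `query`, `clicks`, `impressions`, and `position` columns; those column names, the sample rows, and the thresholds (1,000 impressions, position worse than 10) are all assumptions to adjust to your own export and traffic levels.

```python
import csv
import io

# Hypothetical export; real column names depend on how you export the report.
export = """query,clicks,impressions,position
llm optimization,40,1200,4.2
how to rank in chatgpt,3,2500,18.7
faq schema example,1,150,25.0
ai overview optimization,5,1800,12.3
"""


def find_opportunities(csv_text, min_impressions=1000, min_position=10.0):
    """Return high-impression queries ranking below the first page."""
    rows = csv.DictReader(io.StringIO(csv_text))
    picks = [
        row
        for row in rows
        if int(row["impressions"]) >= min_impressions
        and float(row["position"]) > min_position
    ]
    return sorted(picks, key=lambda row: int(row["impressions"]), reverse=True)


opportunities = find_opportunities(export)
for row in opportunities:
    print(row["query"], row["impressions"], row["position"])
```

Each query that survives the filter is a candidate for a new FAQ entry or a tighter, answer-first rewrite.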

How to Combine These Tools

For optimal results, use a workflow that integrates multiple tools:

- Research Intent: Start with AnswerThePublic and SEMRush to uncover questions and keywords.

- Write and Optimize: Create content with Penfriend, refine it with Grammarly, and structure it with schema using Google’s Structured Data Helper.

- Test and Analyze: Test queries in ChatGPT/Gemini and analyze performance with Google Analytics and Search Console.

- Iterate: Use tools like Screaming Frog to audit and refine existing content.

Using these tools strategically will help you create content that aligns with both traditional SEO and LLM-driven optimization strategies.

Common Mistakes to Avoid

Even with the best tools and strategies, certain pitfalls can undermine your content’s performance in LLM-driven search. Avoid these common mistakes to maximize your optimization efforts:

Keyword Stuffing

Over-relying on exact-match keywords in an attempt to rank higher, often at the expense of readability and user experience.

Why It’s a Problem:

LLMs prioritize semantic understanding and user intent over repetitive keywords. Keyword stuffing can make your content feel unnatural and decrease its effectiveness in AI responses. Simply put, keyword stuffing underperforms and puts brand trust at risk.

A GEO study found that keyword stuffing actually reduces LLM visibility (roughly 10% lower than leaving the content unoptimized).

arXiv, Cornell University

How to Avoid It:

- Focus on semantic keywords and related phrases instead of repeating the same keyword.

- Use tools like SEMRush to find variations and synonyms that align with user intent.

- Write naturally, ensuring that keywords fit seamlessly into the content.

Our example:

- Avoid: “AI writing tools help optimize content for AI. AI writing tools are important for AI content optimization.”

- Better: “AI writing tools can help you create optimized content tailored to AI-driven platforms like ChatGPT.”
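One rough way to spot stuffing in a draft is to measure how much of the text a single phrase takes up. Here is a minimal Python sketch using the two example sentences above. The `phrase_density` helper is hypothetical, and there is no official threshold; treat the number as a prompt to reread the passage, not as a score any LLM uses.

```python
import re


def phrase_density(text, phrase):
    """Share of the words in `text` that belong to occurrences of `phrase`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    phrase_words = phrase.lower().split()
    n = len(phrase_words)
    if not words or n == 0:
        return 0.0
    hits = sum(
        1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase_words
    )
    return hits * n / len(words)


stuffed = (
    "AI writing tools help optimize content for AI. "
    "AI writing tools are important for AI content optimization."
)
better = (
    "AI writing tools can help you create optimized content "
    "tailored to AI-driven platforms like ChatGPT."
)

stuffed_density = phrase_density(stuffed, "AI writing tools")
better_density = phrase_density(better, "AI writing tools")
print(f"stuffed: {stuffed_density:.0%}, better: {better_density:.0%}")
```

Short samples like these exaggerate the percentages, so run the check on a full section or page rather than a single sentence.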

Outdated Content

Failing to update evergreen content with current data, trends, or developments.

Why It’s a Problem:

LLMs value fresh, accurate information. Outdated content may be deprioritized in favor of more recent, relevant sources.

How to Avoid It:

- Schedule regular content audits to identify outdated sections.

- Update evergreen content with new statistics, examples, or trends.

- Add a “Last Updated” timestamp to signal freshness to users and AI tools.

Here’s an example:

- Update phrases like “Emerging AI tools include ChatGPT” to “Leading AI tools in 2024 include ChatGPT and Gemini.”
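A content audit can start as a simple date check. This Python sketch flags pages whose "Last Updated" date is older than a cutoff. The page list, the `stale_pages` helper, and the 180-day default are all assumptions for illustration; pick a cutoff that fits how quickly your topic changes.

```python
from datetime import date

# Hypothetical page inventory with "Last Updated" dates.
pages = [
    {"url": "/blog/llm-optimization", "last_updated": date(2025, 3, 1)},
    {"url": "/blog/ai-tools", "last_updated": date(2024, 1, 15)},
]


def stale_pages(pages, today, max_age_days=180):
    """Return URLs of pages not updated within `max_age_days` of `today`."""
    return [
        page["url"]
        for page in pages
        if (today - page["last_updated"]).days > max_age_days
    ]


stale = stale_pages(pages, today=date(2025, 6, 1))
print("Needs a refresh:", stale)
```

In real use you would pass `date.today()` instead of a fixed date; the fixed date here just keeps the example repeatable.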

Overloading Content

Including overly complex or redundant information that makes your content difficult to process.

Why It’s a Problem:

LLMs prefer concise, clear content. Overloading your page with unnecessary details can confuse the AI and dilute the key message.

How to Avoid It:

- Use bullet points, numbered lists, and subheadings to organize content.

- Break up long paragraphs into digestible chunks.

- Prioritize the most relevant information for your audience and AI tools.

Compare these examples:

- Avoid: A 500-word paragraph detailing every technical aspect of schema markup.

- Better: A concise explanation followed by a bulleted list of steps to implement schema.
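To find overloaded sections in a long draft, you can flag any paragraph over a word limit. The sketch below does that in Python. The `long_paragraphs` helper and the 120-word default are assumptions, not a rule any LLM is known to apply; the draft text is a stand-in for the 500-word paragraph described above.

```python
def long_paragraphs(text, max_words=120):
    """Return (paragraph number, word count) for paragraphs over the limit."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    return [
        (number, len(paragraph.split()))
        for number, paragraph in enumerate(paragraphs, start=1)
        if len(paragraph.split()) > max_words
    ]


# Stand-in draft: one short paragraph, then one 500-word wall of text.
draft = "Schema markup labels your content.\n\n" + " ".join(["detail"] * 500)

flagged = long_paragraphs(draft)
print(flagged)
```

Each flagged paragraph is a candidate for a one-sentence summary followed by a bulleted list.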

Neglecting Schema

Failing to add structured data like FAQ or How-To schema to your content.

Why It’s a Problem:

Schema markup helps LLMs and search engines understand your content’s structure and purpose. Without it, your content may be overlooked in favor of schema-enabled competitors.

How to Avoid It:

- Use tools like Google’s Structured Data Markup Helper to create schema for FAQs, articles, and more.

- Test your schema with Google’s Rich Results Test to ensure proper implementation.

- Regularly update and expand schema to align with new content.

Example:

- Add FAQ schema for questions like “What is LLM optimization?” and “How does schema help with AI search?”

What’s Next?

The rise of LLMs has transformed how content is created, optimized, and consumed. As search shifts from “explore” to “answer,” understanding the nuances of LLM optimization is critical for staying competitive in an AI-driven world.

When you focus on conversational, structured, and credible content, and combine these efforts with robust technical optimizations, you can position your content to perform well in both traditional SEO and AI-driven search.

Key Takeaways:

- LLMs favor concise, direct answers. Always provide a summary up top.

- Freshness and accuracy are critical. Update content regularly and cite authoritative sources.

- Structure (headings, FAQs, schema) helps both search engines and LLMs digest your content effectively.

- Building your brand’s presence online (mentions, Reddit, Wikipedia) significantly increases your chances of appearing in AI-generated answers.

- Engagement signals enhance relevance. User engagement metrics like dwell time and interaction rates signal quality to LLMs and improve your content’s chances of being cited.

- Don’t abandon traditional SEO. High-ranking content remains more likely to be seen and cited by LLMs.

- Optimize for agents (AX), not just users – structured data, semantic URLs, and listicles are key.

- Format content for AI Overviews. Chunk content semantically and clearly.

As the search landscape evolves, those who adapt their strategies to cater to both users and LLMs will lead the way. Ready to take your content to the next level? Start implementing these strategies today and watch your content thrive in the era of AI-powered search.

Ready to Optimize Your Content For LLMs?

Download our free checklist: 7 Steps to Create LLM-Optimized Content and follow the steps to create content that ranks in both AI-driven and traditional search environments.

This guide was written by Inge von Aulock, and edited by Tim Hanson, at Penfriend.ai. Penfriend specializes in AI-driven content optimization, helping content teams efficiently adapt and thrive in the era of LLM-driven search.