As large language models reshape how people discover, evaluate, and trust content online, marketers need a new playbook. Traditional SEO frameworks weren’t built for an AI-first world, where authority isn’t just about backlinks, performance means more than load speed, and brands must be understood as entities, not just websites.

That’s why we adapted and enhanced the CAPE Framework: a modern, strategic system for optimizing content across the four pillars that matter most in LLM-driven search – Content, Authority, Performance, and Entity. Whether you’re writing blog posts, building category pages, or scaling a content engine, CAPE helps ensure your work is built for visibility, trust, and retrieval in the age of AI.

How’d you find this article?

Google? LLM?

Thought so.

Funny, isn’t it?

You’re reading an article on “how to rank in LLMs”

…because it ranked in an LLM.

I wrote it using Penfriend.ai.

You can too.

What Is the CAPE Framework?

The adapted CAPE Framework is Penfriend’s strategic model for LLM optimization. It breaks down the components of AI search success into four focus areas:

- Content: Conversational, structured, semantically rich writing.

- Authority: Digital PR, topical relevance, and entity alignment.

- Performance: Schema markup, crawlability, and AI retrievability.

- Entity: Brand perception, structured data, and LLM associations.

Together, these four pillars form the foundation of content that not only performs well in search but also gets surfaced in AI-generated responses across platforms like ChatGPT, Bing, Gemini, and Claude.

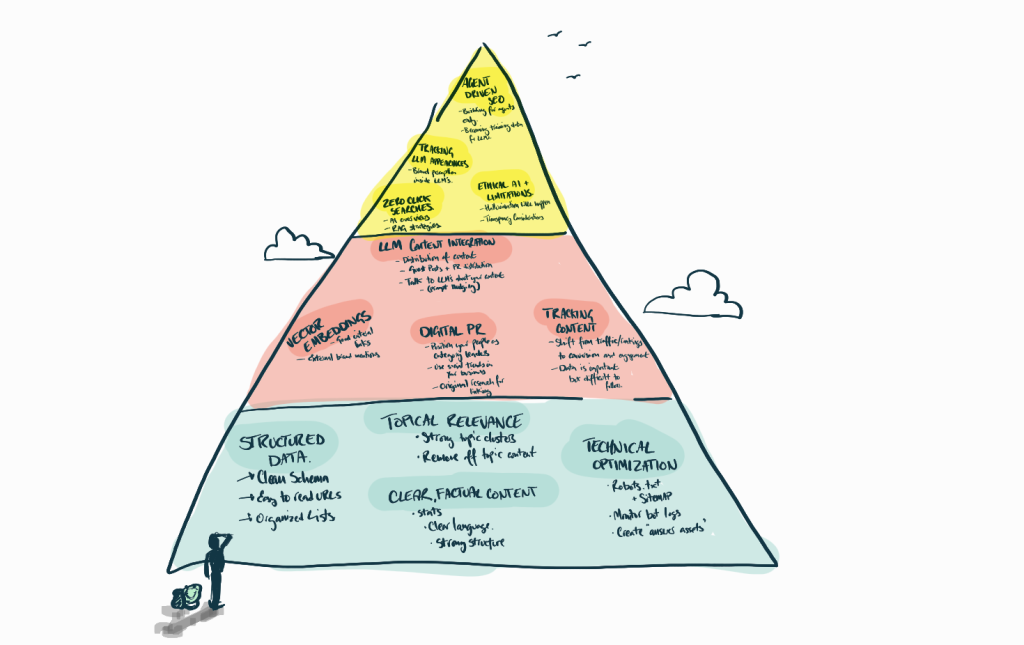

But to fully understand CAPE, it’s helpful to see how it connects to a broader structure. That’s where the AI Content Success Pyramid comes in. It’s a three-tiered framework that organizes everything LLMs value into strategic layers:

- Content Structure (base layer)

- Authority Signals (middle layer)

- Technical Infrastructure (top layer)

Each pillar of CAPE maps directly to a layer of the pyramid:

- C = Content lives in the foundational layer because LLMs favor semantically rich, structured writing.

- A = Authority corresponds to the middle layer – social proof, citations, and topical trust.

- P = Performance and E = Entity make up the top layer. This is your infrastructure, schema, and how machines understand who you are.

Together, CAPE and the Pyramid give you a strategic, layered approach to crafting content that performs in the AI-first era.

C = Content

In the world of LLM optimization, content is still king, but not in the way traditional SEO taught us. Large language models prioritize content that is concise, conversational, and semantically rich. The goal isn’t to game an algorithm; it’s to be understood and cited by intelligent agents trained on massive text corpora.

Key Principles for LLM-Optimized Content:

- Lead with Answers: LLMs prefer content that starts with the answer. Always summarize key points in the first few lines of a section.

- Write Conversationally: Avoid robotic or keyword-stuffed phrasing. Write as if you’re speaking to a curious but intelligent human.

- Use Semantic Keywords: Rather than relying on exact-match terms, use clusters of related phrases that map to the user’s intent.

- Chunk Your Content: Use clear subheadings, bullet points, and numbered lists to make it easier for LLMs to extract relevant snippets.

- Include FAQs: LLMs often reference FAQ sections when answering questions, especially in AI Overviews and zero-click results.
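To make the chunking idea concrete, here is a minimal sketch of how a retrieval pipeline might split a page into one chunk per heading. It assumes the draft is written in Markdown with `#`-style headings; the function name `chunk_by_heading` and the sample text are made up for illustration, and real retrieval systems may chunk quite differently.

```python
import re


def chunk_by_heading(markdown_text: str) -> list[dict]:
    """Split Markdown into one chunk per heading (assumes '#'-style headings)."""
    chunks = []
    current = {"heading": "", "body": []}

    def has_content(chunk: dict) -> bool:
        return bool(chunk["heading"]) or any(s.strip() for s in chunk["body"])

    for line in markdown_text.splitlines():
        match = re.match(r"^(#{1,6})\s+(.*)$", line)
        if match:
            # A new heading closes the chunk collected so far.
            if has_content(current):
                chunks.append(current)
            current = {"heading": match.group(2).strip(), "body": []}
        else:
            current["body"].append(line)

    if has_content(current):
        chunks.append(current)

    return [
        {"heading": c["heading"], "text": "\n".join(c["body"]).strip()}
        for c in chunks
    ]


# Hypothetical sample draft.
sample = """## What is CAPE?
CAPE stands for Content, Authority, Performance, and Entity.

## Who is it for?
Marketers optimizing content for AI search.
"""

chunks = chunk_by_heading(sample)
for chunk in chunks:
    print(chunk["heading"], "->", chunk["text"])
```

If a chunk only makes sense after reading the section before it, that’s a hint the section should lead with its own answer.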

Example Before/After (Tone Shift):

Before: “AI content tools are revolutionizing the way content is produced and distributed across digital ecosystems.”

After: “AI writing tools help you create blogs, guides, and FAQs faster. They save time without sacrificing quality.”

Simple. Direct. And exactly how LLMs like it.

Want content that’s already optimized for LLMs?

Try Penfriend and get your first 3 articles free.

A = Authority

Authority isn’t just about who you are; it’s about who trusts you, who cites you, and how frequently you’re mentioned in the broader conversation. In the LLM era, models gauge authority through a mix of entity recognition, co-citations, and factual reliability.

Key Ways to Build Authority for LLMs:

- Earn Mentions and Links from Reputable Sources: Get quoted in industry publications, contribute expert commentary via platforms like HARO or Featured, and publish original research that others cite.

- Be Present in Trusted Data Sources: Wikipedia, Wikidata, and Reddit are widely reported to feature in LLM training data. If you’re missing from these sources, you’re missing an authority signal.

- Co-Citation is the New Backlink: It’s not just about having a link; it’s about who else is mentioned near you. Being cited in the same breath as credible sources boosts your brand’s perceived expertise.

- Quote and Cite Credible Experts: Adding verifiable sources, stats, and attribution in your content helps LLMs recognize your content as trustworthy and accurate.

- Own Your Topical Niche: Publish a dense cluster of content around one subject to build semantic depth. When LLMs see 20+ related articles all supporting the same theme, you become a topical authority.

Example Authority Move:

Instead of: “Our software saves time.”

Try: “According to a 2024 study published in Martech Today, automation tools like ours can save teams up to 7 hours per week.”

This helps LLMs associate your content with trustworthy, citable information.

P = Performance

Performance in the CAPE Framework isn’t just about how fast your site loads; it’s about how well your content can be parsed, retrieved, and trusted by AI systems. LLMs rely on structured, readable, and semantically rich HTML to extract accurate responses, which means technical SEO matters more than ever.

How to Optimize for Performance in an LLM Context:

- Implement Schema Markup: Add structured data for FAQs, How-Tos, Articles, and Organization info. This helps LLMs understand the role and purpose of your content.

- Minimize JavaScript Dependencies: Many LLM crawlers don’t render JavaScript. Keep your critical content and schema inline in plain HTML.

- Chunk Content Logically: Break long content into clearly labeled sections so retrieval systems can extract meaningful chunks.

- Optimize Metadata: Write titles and descriptions that summarize the answer clearly. This makes your content more likely to show up in featured snippets and AI Overviews.

- Maintain Fast Load Speeds: While less relevant to LLMs directly, fast load speeds are still a user signal for quality. Tools like Google PageSpeed Insights can help.
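As a rough illustration of the schema markup point, the sketch below builds FAQ structured data as JSON-LD using the schema.org `FAQPage`, `Question`, and `Answer` types. The helper name `build_faq_jsonld` and the sample question are assumptions for the example; plugins like the ones mentioned later in this article generate equivalent markup for you.

```python
import json


def build_faq_jsonld(faqs: list[tuple[str, str]]) -> str:
    """Return FAQPage JSON-LD for a list of (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }
    return json.dumps(data, indent=2)


# Hypothetical FAQ content for illustration.
faq_json = build_faq_jsonld(
    [
        (
            "What is the CAPE Framework?",
            "A model for LLM optimization built on Content, Authority, "
            "Performance, and Entity.",
        )
    ]
)

# Embed the JSON-LD directly in the page's static HTML so crawlers that
# skip JavaScript can still read it.
script_tag = f'<script type="application/ld+json">\n{faq_json}\n</script>'
print(script_tag)
```

Keeping the tag in the server-rendered HTML, rather than injecting it with a script, lines up with the advice above about minimizing JavaScript dependencies.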

Penny’s Bonus Tip:

Add descriptive alt text to visuals and infographics. Multimodal LLMs (like Gemini or GPT-4o) use these cues to contextualize visual data.
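One way to act on this tip is a quick audit for images that lack alt text. The sketch below uses Python’s standard `html.parser`; the class name `AltTextAuditor` and the sample HTML are made up for illustration. Note that an empty `alt=""` is legitimate for purely decorative images, so treat the output as a review list rather than a list of errors.

```python
from html.parser import HTMLParser


class AltTextAuditor(HTMLParser):
    """Collect the src of every <img> with missing or empty alt text."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        # Flag images with no alt attribute or only whitespace in it.
        if alt is None or not alt.strip():
            self.missing_alt.append(attributes.get("src") or "(no src)")


# Hypothetical page fragment.
page_html = """<img src="pyramid.png" alt="AI Content Success Pyramid with three layers">
<img src="chart.png">
<img src="logo.png" alt="">"""

auditor = AltTextAuditor()
auditor.feed(page_html)
print(auditor.missing_alt)  # ['chart.png', 'logo.png']
```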

E = Entity

LLMs don’t just read your content; they learn who you are, building associations between your brand and related topics, keywords, and industries. That means entity optimization is no longer a technical detail. It’s the heart of modern digital strategy.

How to Strengthen Your Entity Signals:

- Own Your About Page: Your About, Team, and Product pages should clearly communicate who you are, what you do, and where you’re cited. Use proper schema (Organization, Person, Product).

- Get Cited in Authoritative Places: Mentions in Wikipedia, Wikidata, Crunchbase, and news articles help define your entity profile in LLM training data.

- Align Consistently Across the Web: Make sure your bios, descriptions, and NAP (name, address, phone) info match everywhere. Inconsistencies weaken trust.

- Use Structured Data to Define Relationships: Explicitly define how your people, products, and services are related with sameAs, knows, or affiliation markup.

- Prompt the LLMs: Ask ChatGPT or Gemini, “What do you know about [Brand]?” Regularly audit what they say and fill the gaps with optimized content or citations.
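Here is a minimal sketch of what relationship markup might look like, using the schema.org `Organization` and `Person` types with the `sameAs` and `affiliation` properties. Every name and URL below is a placeholder, not a real profile; swap in your own brand, people, and verified profile links.

```python
import json

# Placeholder organization; "@id" gives other entities something to point at.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "@id": "https://example.com/#organization",
    "name": "Example Brand",
    "url": "https://example.com/",
    "sameAs": [
        "https://www.linkedin.com/company/example-brand",
        "https://www.crunchbase.com/organization/example-brand",
    ],
}

# Placeholder author, linked to the organization through "affiliation".
author = {
    "@context": "https://schema.org",
    "@type": "Person",
    "name": "Jane Doe",
    "jobTitle": "Head of Content",
    "affiliation": {"@id": organization["@id"]},
    "sameAs": ["https://www.linkedin.com/in/jane-doe-example"],
}

entity_graph = json.dumps([organization, author], indent=2)
print(entity_graph)
```

The `sameAs` links tell machines that the profiles elsewhere on the web belong to the same entity, which is also why the naming consistency mentioned above matters.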

Example Entity Move:

If you want to be associated with “LLM Optimization,” create:

- 10+ semantically linked blogs on the topic

- A dedicated hub page

- An optimized About page with schema

- Guest posts or quotes in trusted publications using that phrase

All of it teaches the model: You = authority on this subject.

How to Apply the CAPE Framework in Your Content Workflow

The CAPE Framework isn’t just a theory; it’s a practical system for building content that gets found, trusted, and cited by large language models.

If you’re brand new to LLM optimization or want a deeper dive into the big picture, we’ve published a massive, practical resource: Optimizing Content for LLMs: Strategies to Rank in AI-Driven Search. It’s our comprehensive guide to everything LLMO, including semantic search, RAG optimization, and zero-click visibility.

Here’s a quick step-by-step on how to bring each pillar into your real-world content workflow:

Step 1: Plan with CAPE in Mind

- Start your brief with CAPE criteria baked in: What’s the target entity? Which schema types will the page need? Which sources will lend it authority?

- Use a CAPE-aligned checklist to guide writing, editing, and publishing.
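A CAPE-aligned checklist can be as simple as a structured brief that reports what’s still missing. The sketch below is one possible shape, not an official template: the `CapeBrief` class, its fields, and the wording of each reminder are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class CapeBrief:
    """Hypothetical content brief with one check per CAPE pillar."""

    topic: str
    leads_with_answer: bool = False                             # Content
    authority_sources: list[str] = field(default_factory=list)  # Authority
    schema_types: list[str] = field(default_factory=list)       # Performance
    target_entity: str = ""                                     # Entity

    def open_items(self) -> list[str]:
        """Return a reminder for every pillar the brief has not covered."""
        items = []
        if not self.leads_with_answer:
            items.append("Content: plan sections that open with the answer")
        if not self.authority_sources:
            items.append("Authority: list the sources or experts to cite")
        if not self.schema_types:
            items.append("Performance: choose the schema types to add")
        if not self.target_entity:
            items.append("Entity: name the entity this piece should reinforce")
        return items


brief = CapeBrief(
    topic="LLM optimization",
    schema_types=["Article", "FAQPage"],
    target_entity="Example Brand",
)
for item in brief.open_items():
    print("TODO:", item)
```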

Step 2: Write with “C” and “A” First

- Lead with strong, clear answers and use a natural, human tone.

- Layer in expert quotes, stats, and relevant third-party links to build authority as you go.

Step 3: Optimize for “P” Before Publishing

- Add structured data using tools like RankMath, Yoast, or Google’s Markup Helper.

- Double-check schema, metadata, load speed, and mobile rendering.
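For the schema double-check, a small script can confirm that the structured data is present in the static HTML and parses as valid JSON. This is a rough sketch using only the Python standard library; the names `JsonLdExtractor` and `check_jsonld` and the sample page are hypothetical, and it checks syntax only, not whether the markup satisfies any search engine’s requirements.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the raw text of every application/ld+json script block."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append("".join(self._buffer))
            self._in_jsonld = False


def check_jsonld(html_text: str) -> list[tuple[str, bool]]:
    """Return (label, is_valid_json) for each JSON-LD block in the HTML."""
    extractor = JsonLdExtractor()
    extractor.feed(html_text)
    extractor.close()
    results = []
    for raw in extractor.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            results.append(("(invalid JSON)", False))
            continue
        if isinstance(data, dict):
            label = data.get("@type", "(no @type)")
        else:
            label = "(not an object)"
        results.append((label, True))
    return results


# Hypothetical page: the second block has a trailing comma, so it is invalid.
page = """<html><head>
<script type="application/ld+json">{"@context": "https://schema.org", "@type": "Article", "headline": "CAPE"}</script>
<script type="application/ld+json">{"@type": "FAQPage",}</script>
</head></html>"""

print(check_jsonld(page))  # [('Article', True), ('(invalid JSON)', False)]
```

Because the script reads raw HTML without running JavaScript, an empty result on a page you expected to have schema suggests the markup is being injected client-side.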

Step 4: Strengthen “E” with Entity Signals

- Make sure your About page, author bio, and product pages are optimized with schema and consistent naming.

- Publish content clusters around your target topic to establish your brand as an entity worth referencing.

Step 5: Test and Refine

- Use ChatGPT, Gemini, and Perplexity to search target queries. Ask, “Would this content be cited by an LLM?”

- Refine based on feedback or gaps in entity recall.
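To keep this audit repeatable, you could log the answers you get back and track how often your brand appears. The sketch below assumes you have pasted the responses in by hand; it makes no API calls, and the function name `mention_report`, the brand, and the sample answers are all made up for illustration.

```python
def mention_report(brand: str, responses: dict[str, str]) -> dict:
    """Summarize which saved answers mention the brand (case-insensitive)."""
    brand_lower = brand.lower()
    gaps = [
        query
        for query, answer in responses.items()
        if brand_lower not in answer.lower()
    ]
    total = len(responses)
    mentioned = total - len(gaps)
    return {
        "mention_rate": mentioned / total if total else 0.0,
        "gaps": gaps,
    }


# Hypothetical answers copied from manual test prompts.
responses = {
    "What is LLM optimization?": (
        "LLM optimization is the practice of structuring content for AI "
        "search. Example Brand describes it using four pillars."
    ),
    "Best tools for AI content?": (
        "Popular options include several AI writing assistants."
    ),
}

report = mention_report("Example Brand", responses)
print(report)
```

The queries in `gaps` are the ones to target with new content or citations in the next round.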

When you implement CAPE at every step, from brief to publishing, you’re no longer just creating content. You’re shaping how the AI-powered web sees, understands, and trusts your brand.

CAPE Is Your AI-Era Content Advantage

As AI continues to reshape how content is discovered and distributed, frameworks like CAPE are no longer optional; they’re essential. By aligning your strategy around Content, Authority, Performance, and Entity, you’re building content that’s not only visible to search engines but meaningful to the AI systems shaping user behavior.

This isn’t just about rankings. It’s about credibility. Visibility. And long-term digital equity.

- Want to future-proof your brand?

- Want to show up in ChatGPT, Gemini, or Bing?

- Want to create content that gets cited, not just clicked?

Start using CAPE today.

Or, better yet: Try Penfriend.